Sensitivity analysis and design optimization: Difference between revisions

| (37 intermediate revisions by 2 users not shown) | |||

| Line 13: | Line 13: | ||

=Introduction= |

=Introduction= |

||

Optimization and sensitivity analysis are key aspects of successful process design. Optimizing a process maximizes project value and plant performance, minimizes project cost, and facilitates the selection of the best components |

Optimization and sensitivity analysis are key aspects of successful process design. Optimizing a process maximizes project value and plant performance, minimizes project cost, and facilitates the selection of the best components (Towler and Sinnott, 2013). |

||

=Design Optimization= |

=Design Optimization= |

||

| Line 26: | Line 26: | ||

Although profitability or cost is generally the basis for optimization, practical and intangible factors usually need to be included as well in the final investment decision. Such factors are often difficult or impossible to quantify, and so decision maker judgment must weigh such factors in the final analysis |

Although profitability or cost is generally the basis for optimization, practical and intangible factors usually need to be included as well in the final investment decision. Such factors are often difficult or impossible to quantify, and so decision maker judgment must weigh such factors in the final analysis (Peters and Timmerhaus, 2003; Ulrich, 1984). |

||

=Defining the Optimization Problem and Objective Function= |

=Defining the Optimization Problem and Objective Function= |

||

In optimization, we seek to maximize or minimize a quantity called the goodness of design or objective function, which can be written as a mathematical function of a finite number of variables called the decision variables. |

In optimization, we seek to maximize or minimize a quantity called the goodness of design or objective function, which can be written as a mathematical function of a finite number of variables called the decision variables. |

||

<math> z = f(x_1, x_2, \ldots, x_n) </math> |

||

The decision variables may be independent or they may be related via constraint equations. Examples of process variables include operating conditions such as temperature and pressure, and equipment specifications such as the number of trays in a distillation column. The name and strategy of this optimization method vary between texts: Turton et al. suggest creating a base case before defining the objective function, while Seider et al. classify the objective function as one part of a nonlinear program (NLP) (Seider et al., 2004; Turton et al., 2012). |

||

A second type of process variable is the dependent variable: a variable whose value is determined by the process constraints rather than chosen freely. Common examples of process constraints include process operability limits, reaction chemical species dependence, and product purity and production rate. Towler and Sinnott distinguish between equality and inequality constraints (Towler, 2012). Equality constraints are the laws of physics and chemistry, design equations, and mass/energy balances: |

||

<math> h(x_1, x_2, \ldots, x_n) = b_1 </math> |

||

For example, a distillation column that is modeled with stages assumed to be in phase equilibrium often has several hundred MESH (material balance, equilibrium, summation of mole fractions, and heat balance) equations. However, in the implementation of most simulators, these equations are solved for each process unit, given equipment parameters and stream variables. Hence, when using these simulators, the equality constraints for the process units are not shown explicitly in the nonlinear program. Given values for the design variables, the simulators call upon these subroutines to solve the appropriate equations and obtain the unknowns that are needed to perform the optimization (Seider et al., 2004). |

||

Inequality constraints are technical, safety, and legal limits, as well as economic and current market conditions: |

||

<math> g(x_1, x_2, \ldots, x_n) \leq b_2 \quad \text{or} \quad g(x_1, x_2, \ldots, x_n) \geq b_2 </math> |

||


Inequality constraints also pertain to equipment; for example, when operating a centrifugal pump, the head developed is inversely related to the throughput. Hence, as the flow rate is varied when optimizing the process, care must be taken to make sure that the required pressure increase does not exceed that available from the pump (Seider et al., 2004). |

||

It is important that a problem is neither under-constrained nor over-constrained, so that a feasible solution is attainable. A degree-of-freedom (DOF) analysis should be completed to reduce the number of process variables that must be considered and to determine whether the system is properly specified. |
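The degree-of-freedom count described above can be sketched in a few lines. This is only an illustration: the function names and the example counts are hypothetical, and it assumes the equality constraints counted are independent of one another.

```python
def degrees_of_freedom(n_variables: int, n_equality_constraints: int) -> int:
    """Variables left free once the (independent) equality constraints are imposed."""
    return n_variables - n_equality_constraints


def classify_specification(dof: int) -> str:
    """Interpret the result of a degree-of-freedom count."""
    if dof < 0:
        return "over-constrained"
    if dof == 0:
        return "fully specified"
    # A positive count means this many variables must still be fixed
    # or handed to the optimizer as decision variables.
    return f"{dof} degree(s) of freedom to specify or optimize"


# Hypothetical example: 12 process variables and 9 independent equations.
dof = degrees_of_freedom(12, 9)
status = classify_specification(dof)
```

A positive count is what makes optimization possible: the remaining free variables are the decision variables of the problem.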

||

| Line 55: | Line 55: | ||

[[File:SAO1.PNG|center|600px]] |

[[File:SAO1.PNG|center|600px]] |

||

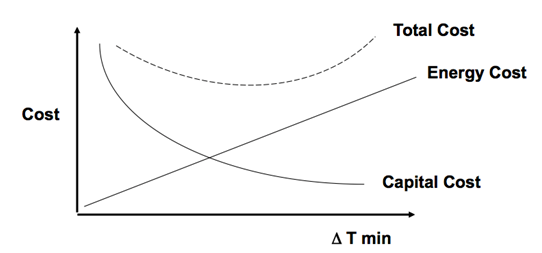

Figure 1: Trade-off example (Towler and Sinnott, 2013) |

|||

Some common design trade-offs are more separations equipment versus low product purity, more recycle costs versus increased feed use and increased waste, more heat recovery versus cheaper heat exchange system, and marketable by-product versus more plant expense. |

Some common design trade-offs are more separations equipment versus low product purity, more recycle costs versus increased feed use and increased waste, more heat recovery versus cheaper heat exchange system, and marketable by-product versus more plant expense. |

||

==Parametric Optimization== |

==Parametric Optimization== |

||

Parametric optimization deals with process operating variables and equipment design variables other than those strictly related to structural concerns. Some of the more obvious examples of such decisions are operating conditions, recycle ratios, and stream properties such as flow rates and compositions. Small changes in these conditions or equipment can have a wide-ranging impact on the system, so parametric optimization problems can contain hundreds of decision variables. It is therefore more efficient to analyze the effect of the most influential variables on the overall system. Done properly, this strikes a balance between the increased difficulty of optimizing over many variables and the accuracy of the optimization (Seider et al., 2004). |

||

==Suboptimizations== |

==Suboptimizations== |

||

Simultaneous optimization of the many parameters present in a chemical process design can be a daunting task due to the large number of variables that can be present in both integer and continuous form, the non-linearity of the property prediction relationships and performance models, and the frequent presence of recycle streams. It is therefore common to seek out suboptimizations for some of the variables, so as to reduce the dimensionality of the problem (Seider et al., 2004). While optimizing sub-problems usually does not lead to the overall optimum, there are instances for which it is valid in a practical, economic sense. Care must always be taken to ensure that subcomponents are not optimized at the expense of other parts of the plant. |

||

Equipment optimization is usually treated as a subproblem that is solved after the main process variables such as reactor conversion, recycle ratios, and product recoveries have been optimized. |

||

=Optimization of a Single Decision Variable= |

=Optimization of a Single Decision Variable= |

||

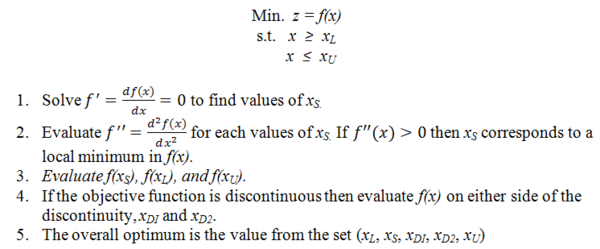

If the objective is a function of a single variable, x, the objective function f(x) can be differentiated with respect to x to give f’(x). The following algorithm summarizes the procedure: |

|||

[[File:SAO5.PNG|center|600px]] |

|||

Below is a graphical representation of the above algorithm. |

|||

[[File:SAO2.PNG|center|500px]] |

|||

Figure 2. Graphical Illustration of (a) Continuous Objective Function (b) Discontinuous Objective Function |

|||

In Figure 2a, <math> x_L </math> is the optimum point, even though there is a local minimum at <math> x_{S1} </math>; in Figure 2b, the optimum is at <math> x_{DI} </math>. |

|||

==Search Methods== |

==Search Methods== |

||

Search methods are at the core of the solution algorithms for complex multivariable objective functions. The four main search methods are unrestricted search, three-point interval search, golden-section search, and the quasi-Newton method (Towler and Sinnott, 2013). |

|||

===Unrestricted Search=== |

===Unrestricted Search=== |

||

Unrestricted search is a relatively simple method of bounding the optimum for problems that are not constrained. The first step is to determine a range in which the optimum lies by making an initial guess of x and assuming a step size, h. The direction of search that leads to improvement in the value of the objective is determined by <math> z_1 </math>, <math> z_2 </math>, and <math> z_3 </math>, where |

<math> z_1 = f(x) </math> |

<math> z_2 = f(x+h) </math> |

<math> z_3 = f(x-h) </math> |

The value of x is then increased or decreased by successive steps of h until the optimum is passed. In engineering design problems it is almost always possible to state upper and lower bounds for every parameter, so unrestricted search methods are not widely used in design. |
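The unrestricted search can be sketched as follows for a minimization problem. This is a minimal illustration rather than a reference implementation: the function name, the `max_steps` safeguard, and the choice to return the bracketing interval are assumptions made here.

```python
def unrestricted_search(f, x0, h, max_steps=1000):
    """Bracket a minimum of f by stepping from x0 in fixed increments of h.

    Returns (lower, upper), an interval that contains the point at which
    the objective stopped improving.
    """
    z1, z2, z3 = f(x0), f(x0 + h), f(x0 - h)
    if z2 < z1:
        step, f_curr = h, z2       # objective improves in the +h direction
    elif z3 < z1:
        step, f_curr = -h, z3      # objective improves in the -h direction
    else:
        return (x0 - h, x0 + h)    # x0 is already no worse than both neighbours

    x_prev, x_curr = x0, x0 + step
    for _ in range(max_steps):
        x_next = x_curr + step
        f_next = f(x_next)
        if f_next >= f_curr:       # the optimum has been passed
            return tuple(sorted((x_prev, x_next)))
        x_prev, x_curr, f_curr = x_curr, x_next, f_next
    raise RuntimeError("no bracket found within max_steps")
```

The interval returned can then be handed to one of the bounded search methods to refine the optimum.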

|||

The three-point interval search proceeds as follows: |

|||

# Evaluate f(x) at the lower and upper bounds, <math> x_L </math> and <math> x_U </math>, and at the center point, <math> \frac {x_L+ x_U} {2} </math>. |

|||

# Two new points are added at the midpoints between the bounds and the center point, at <math> \frac {3x_L + x_U} {4} </math> and <math> \frac {x_L + 3x_U} {4} </math>. |

|||

# The three adjacent points with the lowest values of f(x) (or the highest values for a maximization problem) are then used to define the next search range. |

|||

By eliminating two of the four quarters of the range at each step, this procedure reduces the range by half each cycle. To reduce the range to a fraction <math> \epsilon </math> of the initial range therefore takes n cycles, where <math> \epsilon = 0.5^n </math>. Since each cycle requires calculating f(x) for two additional points, the total number of calculations is <math> 2n = \frac {2 \log \epsilon} {\log 0.5} </math>. |

|||

The procedure is terminated when the range has been reduced sufficiently to give the desired precision in the optimum. For design problems it is usually not necessary to specify the optimal value of the decision variables to high precision, so <math> \epsilon </math> is usually not a very small number. |
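The three steps above can be sketched for a minimization problem as shown below. The function name and return values are illustrative assumptions. The sketch keeps the lowest interior point together with its two neighbours, which for a unimodal function is the set of three adjacent points with the lowest values.

```python
def three_point_interval_search(f, x_lower, x_upper, eps=0.01):
    """Shrink [x_lower, x_upper] around a minimum of a unimodal f.

    Stops once the range is at most eps times the initial range.
    Returns (x_best, n_evaluations).
    """
    initial_range = x_upper - x_lower
    xs = [x_lower, 0.5 * (x_lower + x_upper), x_upper]
    fs = [f(x) for x in xs]
    n_evals = 3

    while (xs[2] - xs[0]) > eps * initial_range:
        # Add the two quarter points between the bounds and the center.
        q1 = 0.5 * (xs[0] + xs[1])
        q3 = 0.5 * (xs[1] + xs[2])
        pts = [xs[0], q1, xs[1], q3, xs[2]]
        vals = [fs[0], f(q1), fs[1], f(q3), fs[2]]
        n_evals += 2

        # Keep the best interior point and its neighbours: the range halves.
        i = min(range(1, 4), key=lambda j: vals[j])
        xs = pts[i - 1:i + 2]
        fs = vals[i - 1:i + 2]

    return xs[1], n_evals
```

With eps = 0.01 the loop runs seven cycles, since 0.5 to the seventh power is the first value below 0.01, so two evaluations per cycle are added to the three initial ones.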

|||

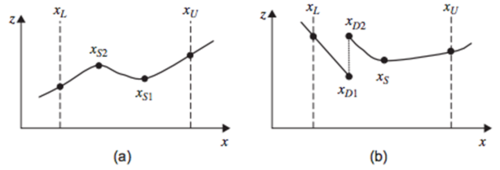

The golden-section search is more computationally efficient than the three-point interval method if <math> \epsilon < 0.29 </math>. In the golden-section search only one new point is added at each cycle. The golden-section method is illustrated in Figure 3. |

|||

[[File:SAO3.PNG|center|500px]] |

|||

Figure 3: Golden-Section Method |

|||

We start by evaluating <math> f(x_L) </math> and <math> f(x_U) </math> at the lower and upper bounds of the range, labeled A and B in the figure. We then add two new points, labeled C and D, each located a distance ωAB from the bounds A and B, i.e., located at |

|||

[[File:SAO6.PNG|left|100px]] |

|||

and |

|||

[[File:SAO7.PNG|left|100px]] |

|||

For a minimization problem, the point that gives the highest value of f(x) is eliminated. In Figure 3, this is point B. A single new point, E, is added, such that the new set of points AECD is symmetric with the old set of points ACDB. For the new set of points to be symmetric with the old set of points, |

|||

<math> AE = CD = \omega AD </math> |

But we know |

<math> DB = \omega AB </math> |

so |

<math> AD = (1 - \omega) AB </math> |

<math> CD = (1 - 2\omega) AB </math> |

<math> (1 - 2\omega) = \omega (1 - \omega) </math> |

<math> \omega = \frac{3 \pm \sqrt{5}}{2} </math> |

Only the root <math> \omega = \frac{3 - \sqrt{5}}{2} \approx 0.382 </math> places C and D inside the range AB, so this is the value used. |

|||

Each new point reduces the range to a fraction <math> 1 - \omega = 0.618 </math> of the previous range. To reduce the range to a fraction <math> \epsilon </math> of the initial range therefore requires <math> n = \frac {\log \epsilon} {\log 0.618} </math> function evaluations. The number <math> 1 - \omega </math> is known as the golden mean. |
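A minimal sketch of the golden-section search for a minimization problem follows, using the smaller root ω = (3 − √5)/2 ≈ 0.382. The names are illustrative assumptions. Note one deliberate difference from the description above: this common variant compares only the two interior points C and D at each cycle and never evaluates f at the bounds.

```python
import math

OMEGA = (3.0 - math.sqrt(5.0)) / 2.0  # about 0.382; 1 - OMEGA is about 0.618


def golden_section_search(f, x_lower, x_upper, eps=0.01):
    """Shrink [x_lower, x_upper] around a minimum of a unimodal f.

    Returns (x_best, n_evaluations); one new evaluation is made per cycle.
    """
    a, b = x_lower, x_upper
    initial_range = b - a
    c = a + OMEGA * (b - a)   # interior point nearer the lower bound
    d = b - OMEGA * (b - a)   # interior point nearer the upper bound
    fc, fd = f(c), f(d)
    n_evals = 2

    while (b - a) > eps * initial_range:
        if fc < fd:
            # Minimum lies in [a, d]: drop the upper end, old C becomes new D.
            b, d, fd = d, c, fc
            c = a + OMEGA * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]: drop the lower end, old D becomes new C.
            a, c, fc = c, d, fd
            d = b - OMEGA * (b - a)
            fd = f(d)
        n_evals += 1

    return 0.5 * (a + b), n_evals
```

Reusing one interior point per cycle is possible only because ω satisfies (1 − 2ω) = ω(1 − ω), which is the symmetry condition derived above.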

|||

The quasi-Newton method is a super-linear search method that seeks the optimum by evaluating f’(x) and f’’(x) and searching for the point where f’(x) = 0. The value of x at step k + 1 is calculated from the value of x at step k using |

|||

<math> x_{k+1} = x_k - \frac {f'(x_k)} {f''(x_k)} </math> |

and the procedure is repeated until <math> \left| x_{k+1} - x_k \right| </math> is less than a convergence tolerance, <math> \epsilon </math>. |

|||

If we do not have explicit formulae for f’(x) and f’’(x), then we can make a finite difference approximation about a point: |

<math> x_{k+1} = x_k - \frac { \left[ f(x_k+h) - f(x_k-h) \right] / 2h } { \left[ f(x_k+h) - 2f(x_k) + f(x_k-h) \right] / h^2 } </math> |

|||

The quasi-Newton method generally gives fast convergence unless f’’(x) is close to zero, in which case convergence is poor. |
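The finite difference form of the update can be sketched as below. The function name, the default step size h, and the iteration limit are assumptions chosen for illustration; central differences stand in for f’(x) and f’’(x).

```python
def quasi_newton_1d(f, x0, h=1e-4, tol=1e-6, max_iter=50):
    """Find a stationary point of f using finite difference derivatives.

    Iterates x_{k+1} = x_k - f'(x_k) / f''(x_k) until successive values
    of x differ by less than tol.
    """
    x = x0
    for _ in range(max_iter):
        first = (f(x + h) - f(x - h)) / (2.0 * h)             # approximates f'(x)
        second = (f(x + h) - 2.0 * f(x) + f(x - h)) / h ** 2  # approximates f''(x)
        if second == 0.0:
            raise ZeroDivisionError("f''(x) is zero; the update is undefined")
        x_new = x - first / second
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("did not converge within max_iter iterations")
```

The method locates a point where f’(x) = 0, so it does not by itself distinguish a minimum from a maximum; the sign of the second derivative estimate indicates which has been found.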

|||

All of the methods discussed in this section are best suited for unimodal functions, that is, functions with no more than one maximum or minimum within the bounded range. |

|||

=Optimization of Two or More Decision Variables= |

=Optimization of Two or More Decision Variables= |

||

A two-variable optimization problem can be solved in one of the following ways: |

|||

#Convexity: Solve graphically using constraint boundaries. |

|||

#Searching in two dimensions: Extensions of the methods used for single-variable line searches |

|||

#Probabilistic Methods |

|||

Multivariable optimization is much harder to visualize in the parameter space, but the same issues of initialization, convergence, convexity, and local optima are faced. The best way to optimize systems with multiple variables remains an active area of research. |

|||

Some of the methods are listed below: |

|||

#Linear programming |

|||

#Nonlinear Programming (NLP) |

|||

##Successive Linear Programming (SLP) |

|||

##Successive Quadratic Programming (SQP) |

|||

##Reduced Gradient Method |

|||

#Mixed Integer Programming |

|||

##Superstructure Optimization |

|||

Seider et al. give simple case studies on how to solve an NLP using ASPEN PLUS and HYSYS, beginning with a simulation model of the process to be optimized and proceeding to cases in which the objective function is evaluated using an automated optimization algorithm. |

|||

=Optimization in Industrial Practice= |

=Optimization in Industrial Practice= |

||

Many of the methods listed above are used industrially, especially linear programming and mixed-integer linear programming, to optimize logistics, supply-chain management, and economic performance. The specifics are traditionally a topic for industrial engineers. |

|||

==Optimization in Process Design== |

==Optimization in Process Design== |

||

Few industrial designs are rigorously optimized because: |

|||

#The errors introduced by uncertainty in process models may be larger than the differences in performance predicted for different designs, which renders the models ineffective for choosing between them. |

|||

#Price uncertainty usually dominates the difference between design alternatives. |

|||

#A substantial amount of design work goes into cost estimates, and revisiting these design decisions at a later stage is usually not justified. |

|||

#Safety, operability, reliability, and flexibility are top priorities in design. A safe, operable plant will often be more expensive than the economically optimal design. |

|||

Experienced design engineers usually think through constraints, trade-offs, major cost components, and the objective function to satisfy themselves that their design is “good enough” (Towler and Sinnott, 2013). |

|||

=Sensitivity Analysis= |

=Sensitivity Analysis= |

||

A sensitivity analysis is a way of examining the effects of uncertainties in the forecasts on the viability of a project (Towler and Sinnott, 2013). First a base case for analysis is established from the investment and cash flows. Various parameters in the cost model are then adjusted over the range of error expected in the forecast figures; this shows how sensitive the cash flows and economic criteria are to errors in the forecasts. The results are usually presented as plots of an economic criterion against the parameter studied, and give some idea of the risk involved in making judgments on the forecast performance of the project. |
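The procedure can be illustrated with a one-at-a-time sensitivity study on net present value. Everything in this sketch is hypothetical: the simple cash flow model, the parameter names, the base case values, and the ranges of variation are placeholders chosen only to show the mechanics.

```python
def npv(capital, annual_revenue, annual_cost, rate=0.10, years=10):
    """Net present value of a project with constant annual cash flow."""
    cash_flow = annual_revenue - annual_cost
    return -capital + sum(cash_flow / (1.0 + rate) ** t for t in range(1, years + 1))


def one_at_a_time(base_case, variations):
    """Change each parameter alone and record the shift in NPV.

    variations maps a parameter name to (low_factor, high_factor),
    multipliers applied to the base case value of that parameter.
    Returns (base_npv, {name: (delta_at_low, delta_at_high)}).
    """
    base_npv = npv(**base_case)
    deltas = {}
    for name, (low_factor, high_factor) in variations.items():
        row = []
        for factor in (low_factor, high_factor):
            case = dict(base_case)           # all other parameters stay at base
            case[name] = base_case[name] * factor
            row.append(npv(**case) - base_npv)
        deltas[name] = tuple(row)
    return base_npv, deltas


# Hypothetical base case (monetary units are arbitrary) and ranges.
base_case = {"capital": 100.0, "annual_revenue": 50.0, "annual_cost": 20.0}
variations = {
    "capital": (0.8, 1.3),
    "annual_revenue": (0.8, 1.2),
    "annual_cost": (0.9, 1.3),
}
base_npv, deltas = one_at_a_time(base_case, variations)
```

Sorting the parameters by the size of their NPV shift gives the ordering usually shown in a sensitivity plot, with the most influential parameter first.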

|||

==Parameters to Study== |

==Parameters to Study== |

||

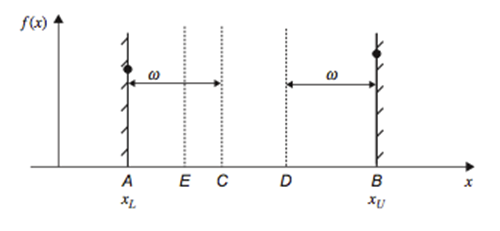

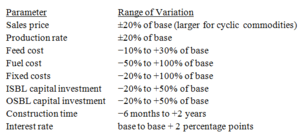

The purpose of sensitivity analysis is to identify the parameters that have a significant impact on project viability over the expected range of variation of the parameter. Table 1 contains typical parameters and their range of variation. |

|||

[[File:SAO4.PNG|center|300px]] |

|||

Table 1: Parameters to study in sensitivity analysis |

|||

==Statistical Methods of Risk Analysis== |

==Statistical Methods of Risk Analysis== |

||

In formal methods of risk analysis, statistical methods are used to examine the effect of variation in all parameters. A simple method described by Towler and Sinnott uses estimates based on the most likely value, upper value, and lower value: ML, H, and L respectively. The upper and lower values are estimated from Table 1, and the mean and standard deviation are then estimated as |

|||

<math> \bar{x} = \frac {H + 2ML + L} {4} </math> |

|||

<math> S_x = \frac {H-L} {2.65} </math> |

|||

It is important to recognize that the estimates from this method will likely be skewed if the assumed distribution is incorrect. The mean and standard deviation of other parameters can be calculated as functions of the estimates above. This allows relatively easy estimation of the overall error in a completed cost estimate, and can be extended to economic criteria such as NPV, TAC, or ROI. Commercial programs are available for more sophisticated analyses such as the Monte Carlo method (Towler and Sinnott, 2013). |
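The two estimates above translate directly into code. The second function is an added assumption, not taken from the source: it combines several cost items into a total by treating their errors as statistically independent, so that means add and variances add.

```python
import math


def estimate_mean_std(low, most_likely, high):
    """Mean and standard deviation from L, ML, and H estimates."""
    mean = (high + 2.0 * most_likely + low) / 4.0
    std = (high - low) / 2.65
    return mean, std


def combine_independent(items):
    """Total mean and standard deviation for a sum of cost items.

    items is a list of (mean, std) pairs. Assumes the items are
    independent, which is an illustrative simplification.
    """
    total_mean = sum(mean for mean, _ in items)
    total_std = math.sqrt(sum(std ** 2 for _, std in items))
    return total_mean, total_std


# Hypothetical cost item: most likely 100, with a range from 80 to 130.
mean, std = estimate_mean_std(80.0, 100.0, 130.0)
```

For the hypothetical item shown, the mean is 102.5, slightly above the most likely value because the range is wider on the high side.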

|||

==Contingency Costs== |

==Contingency Costs== |

||

A minimum contingency charge of 10% is normally added to ISBL plus OSBL fixed capital to account for variations in capital cost. If the confidence interval of the estimate is known, the contingency charges can be estimated based on the desired level of certainty that the project will not exceed projected costs. |
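One way to relate contingency to a desired level of certainty is sketched below. It rests on an assumption that the source does not state: that the error in the capital cost estimate is normally distributed with a known relative standard deviation. The function name and parameters are hypothetical.

```python
from statistics import NormalDist


def contingency_fraction(relative_std, confidence, minimum=0.10):
    """Contingency as a fraction of estimated fixed capital.

    relative_std: standard deviation of the estimate divided by its mean.
    confidence:   desired probability that the final cost stays within
                  the estimate plus contingency (between 0 and 1).
    minimum:      floor applied to the result (10% by default).
    Assumes a normally distributed estimating error.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf(confidence)  # standard normal quantile
    return max(minimum, z * relative_std)
```

Under these assumptions, an estimate with a 10% relative standard deviation needs roughly 13% contingency for 90% confidence, while lower confidence levels fall back to the 10% minimum.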

|||

=Conclusion= |

|||

Design optimization and sensitivity analysis are essential to designing and operating a successful chemical process. Optimization can be difficult because of high levels of uncertainty and the large number of variables involved, but it can help minimize costs and increase efficiency. Chemical engineers need to understand the optimization methods and the role of constraints in limiting designs, recognize design trade-offs, and understand the pitfalls of their analysis. Similarly, sensitivity analysis is a way of examining the effects of uncertainties in the forecasts on the viability of a project. A project whose process has been optimized and subjected to a sensitivity analysis is more likely to be cost effective and to run smoothly. |

|||

=References= |

|||

Peters MS, Timmerhaus KD. Plant Design and Economics for Chemical Engineers. 5th ed. New York: McGraw Hill; 2003. |

|||

Seider WD, Seader JD, Lewin DR. Process Design Principles: Synthesis, Analysis, and Evaluation. 3rd ed. New York: Wiley; 2004. |

|||

Towler G. Chemical Engineering Design. PowerPoint presentation; 2012. |

|||

Towler G, Sinnott R. Chemical Engineering Design: Principles, Practice and Economics of Plant and Process Design. 2nd ed. Boston: Elsevier; 2013. |

|||

Turton R, Bailie RC, Whiting WB, Shaeiwitz JA, Bhattacharyya D. Analysis, Synthesis, and Design of Chemical Processes. 4th ed. Upper Saddle River: Prentice-Hall; 2012. |

|||

Ulrich GD. A Guide to Chemical Engineering Process Design and Economics. New York: Wiley; 1984. |

|||

Latest revision as of 22:19, 1 March 2015

Author: Anne Disabato, Tim Hanrahan, Brian Merkle

Steward: David Chen, Fengqi You

Date Presented: February 23, 2014

Introduction

Optimization and sensitivity analysis are key aspects of successful process design. Optimizing a process maximizes project value and plant performance, minimizes project cost, and facilitates the selection of the best components (Towler and Sinnott, 2013).

Design Optimization

Economic optimization is the process of finding the condition that maximizes financial return or, conversely, minimizes expenses. The factors affecting the economic performance of the design include the types of processing technique and equipment used, arrangement, and sequencing of the processing equipment, and the actual physical parameters for the equipment. The operating conditions are also of prime concern.

Optimization of process design follows the general outline below:

- Establish optimization criteria: using an objective function that is an economic performance measure.

- Define optimization problem: establish various mathematical relations and limitations that describe the aspects of the design

- Design a process model with appropriate cost and economic data

Although profitability or cost is generally the basis for optimization, practical and intangible factors usually need to be included as well in the final investment decision. Such factors are often difficult or impossible to quantify, and so decision maker judgment must weigh such factors in the final analysis (Peters and Timmerhaus, 2003; Ulrich, 1984).

Defining the Optimization Problem and Objective Function

In optimization, we seek to maximize or minimize a quantity called the goodness of design or objective function, which can be written as a mathematical function of a finite number of variables called the decision variables.

<math> z = f(x_1, x_2, \ldots, x_n) </math>

The decision variables may be independent or they may be related via constraint equations. Examples of process variables include operating conditions such as temperature and pressure, and equipment specifications such as the number of trays in a distillation column. The name and strategy of this optimization method vary between texts: Turton et al. suggest creating a base case before defining the objective function, while Seider et al. classify the objective function as one part of a nonlinear program (NLP) (Seider et al., 2004; Turton et al., 2012).

A second type of process variable is the dependent variable: a variable whose value is determined by the process constraints rather than chosen freely. Common examples of process constraints include process operability limits, reaction chemical species dependence, and product purity and production rate. Towler and Sinnott distinguish between equality and inequality constraints (Towler, 2012). Equality constraints are the laws of physics and chemistry, design equations, and mass/energy balances:

<math> h(x_1, x_2, \ldots, x_n) = b_1 </math>

For example, a distillation column that is modeled with stages assumed to be in phase equilibrium often has several hundred MESH (material balance, equilibrium, summation of mole fractions, and heat balance) equations. However, in the implementation of most simulators, these equations are solved for each process unit, given equipment parameters and stream variables. Hence, when using these simulators, the equality constraints for the process units are not shown explicitly in the nonlinear program. Given values for the design variables, the simulators call upon these subroutines to solve the appropriate equations and obtain the unknowns that are needed to perform the optimization (Seider et al., 2004).

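Inequality constraints are technical, safety, and legal limits, as well as economic and current market conditions:

<math> g(x_1, x_2, \ldots, x_n) \leq b_2 \quad \text{or} \quad g(x_1, x_2, \ldots, x_n) \geq b_2 </math>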

Inequality constraints also pertain to equipment; for example, when operating a centrifugal pump, the head developed is inversely related to the throughput. Hence, as the flow rate is varied when optimizing the process, care must be taken to make sure that the required pressure increase does not exceed that available from the pump (Seider et al., 2004).

It is important that a problem is neither under-constrained nor over-constrained, so that a feasible solution is attainable. A degree-of-freedom (DOF) analysis should be completed to reduce the number of process variables that must be considered and to determine whether the system is properly specified.

Trade-Offs

A part of optimization is assessing trade-offs; usually getting better performance from equipment means higher cost. The objective function must capture this trade-off between cost and benefit.

Example: Heat Recovery, total cost captures trade-off between energy savings and capital expense.

Figure 1: Trade-off example (Towler and Sinnott, 2013)
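The heat recovery trade-off can be illustrated numerically. The cost correlations below are invented purely for illustration and are not taken from Towler and Sinnott: annualized capital cost is assumed to rise with exchanger area, while annual energy cost is assumed to fall as more heat is recovered.

```python
def annual_capital_cost(area_m2):
    """Hypothetical annualized capital cost of the heat exchanger."""
    return 2000.0 * area_m2 ** 0.6


def annual_energy_cost(area_m2):
    """Hypothetical annual utility cost, falling as recovery area grows."""
    return 500000.0 / (1.0 + area_m2 / 50.0)


def total_annual_cost(area_m2):
    """Objective function: captures the capital versus energy trade-off."""
    return annual_capital_cost(area_m2) + annual_energy_cost(area_m2)


# Scan candidate areas (m2) and keep the one with the lowest total cost.
candidate_areas = range(10, 1001, 10)
best_area = min(candidate_areas, key=total_annual_cost)
```

Neither cost term has an interior minimum on its own; the optimum appears only when both are combined in a single objective function, which is the point of the trade-off plot.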

Some common design trade-offs are more separations equipment versus low product purity, more recycle costs versus increased feed use and increased waste, more heat recovery versus cheaper heat exchange system, and marketable by-product versus more plant expense.

Parametric Optimization

Parametric optimization deals with process operating variables and equipment design variables other than those strictly related to structural concerns. Some of the more obvious examples of such decisions are operating conditions, recycle ratios, and stream properties such as flow rates and compositions. Small changes in these conditions or equipment can have a wide-ranging impact on the system, so parametric optimization problems can contain hundreds of decision variables. It is therefore more efficient to analyze the effect of the most influential variables on the overall system. Done properly, this strikes a balance between the increased difficulty of optimizing over many variables and the accuracy of the optimization (Seider et al., 2004).

Suboptimizations

Simultaneous optimization of the many parameters present in a chemical process design can be a daunting task due to the large number of variables that can be present in both integer and continuous form, the non-linearity of the property prediction relationships and performance models, and the frequent presence of recycle streams. It is therefore common to seek out suboptimizations for some of the variables, so as to reduce the dimensionality of the problem (Seider et al., 2004). While optimizing sub-problems usually does not lead to the overall optimum, there are instances for which it is valid in a practical, economic sense. Care must always be taken to ensure that subcomponents are not optimized at the expense of other parts of the plant.

Equipment optimization is usually treated as a subproblem that is solved after the main process variables such as reactor conversion, recycle ratios, and product recoveries have been optimized.

Optimization of a Single Decision Variable

If the objective is a function of a single variable, x, the objective function f(x) can be differentiated with respect to x to give f’(x). The following algorithm summarizes the procedure:

Below is a graphical representation of the above algorithm.

Figure 2. Graphical Illustration of (a) Continuous Objective Function (b) Discontinuous Objective Function

In Figure 2a, <math> x_L </math> is the optimum point, even though there is a local minimum at <math> x_{S1} </math>; in Figure 2b, the optimum is at <math> x_{DI} </math>.

Search Methods

Search methods are at the core of the solution algorithms for complex multivariable objective functions. The four main search methods are unrestricted search, three-point interval search, golden-section search, and the quasi-Newton method (Towler and Sinnott, 2013).

Unrestricted Search

Unrestricted search is a relatively simple method of bounding the optimum for problems that are not constrained. The first step is to determine a range in which the optimum lies by making an initial guess of x and assuming a step size, h. The direction of search that leads to improvement in the value of the objective is determined by <math> z_1 </math>, <math> z_2 </math>, and <math> z_3 </math>, where

<math> z_1 = f(x) </math>

<math> z_2 = f(x+h) </math>

<math> z_3 = f(x-h) </math>

The value of x is then increased or decreased by successive steps of h until the optimum is passed. In engineering design problems it is almost always possible to state upper and lower bounds for every parameter, so unrestricted search methods are not widely used in design.

The three-point interval is done as follows:

- Evaluate f(x) at the upper and lower bounds, Failed to parse (SVG with PNG fallback (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_L } and Failed to parse (SVG with PNG fallback (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_U } , and at the center point, .

- Two new points are added in the midpoints between the bounds and the center point, at Failed to parse (SVG with PNG fallback (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \frac {3x_L + x_U} {4} } and

- The three adjacent points with the lowest values of f(x) (or the highest values for a maximization problem) are then used to define the next search range.

By eliminating two of the four quarters of the range at each step, this procedure reduces the range by half each cycle. To reduce the range to a fraction ε of the initial range therefore takes n cycles, where $\epsilon = 0.5^n$. Since each cycle requires calculating f(x) for two additional points, the total number of calculations is $2n = \frac{2 \log \epsilon}{\log 0.5}$. The procedure is terminated when the range has been reduced sufficiently to give the desired precision in the optimum. For design problems it is usually not necessary to specify the optimal value of the decision variables to high precision, so ε is usually not a very small number.
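The three-point interval procedure can be sketched in Python as follows. This is an illustrative implementation for a minimization problem, assuming a unimodal objective; the function and argument names are hypothetical, and the evaluation count it returns includes the three initial evaluations in addition to the two per cycle.

```python
def three_point_interval_search(f, x_lower, x_upper, fraction=0.01):
    """Minimize a unimodal f on [x_lower, x_upper].

    Stops once the range is no more than `fraction` of the initial range.
    Returns (x_best, number_of_function_evaluations).
    """
    x_mid = 0.5 * (x_lower + x_upper)
    # Each entry is (x, f(x)); the list always holds lower, center, upper.
    pts = [(x, f(x)) for x in (x_lower, x_mid, x_upper)]
    evaluations = 3
    target = fraction * (x_upper - x_lower)
    while pts[2][0] - pts[0][0] > target:
        (xl, fl), (xm, fm), (xu, fu) = pts
        # Two new points midway between each bound and the center point.
        x_q1 = 0.25 * (3 * xl + xu)
        x_q3 = 0.25 * (xl + 3 * xu)
        five = [(xl, fl), (x_q1, f(x_q1)), (xm, fm), (x_q3, f(x_q3)), (xu, fu)]
        evaluations += 2
        # Keep the three adjacent points around the lowest value; if the
        # lowest value is at a bound, keep the three points nearest to it.
        best = min(range(5), key=lambda i: five[i][1])
        center = min(max(best, 1), 3)
        pts = five[center - 1:center + 2]
    return pts[1][0], evaluations
```

For a maximization problem, the same sketch can be applied to the negative of the objective.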

The golden-section search is more computationally efficient than the three-point interval method if $\epsilon < 0.29$. In the golden-section search only one new point is added at each cycle. The golden-section method is illustrated in Figure 3.

Figure 3: Golden-Section Method

We start by evaluating $f(x_L)$ and $f(x_U)$ corresponding to the lower and upper bounds of the range, labeled A and B in the figure. We then add two new points, labeled C and D, each located a distance ωAB from the bounds A and B, i.e., located at $x_L + \omega (x_U - x_L)$ and $x_U - \omega (x_U - x_L)$.

For a minimization problem, the point that gives the highest value of f(x) is eliminated. In Figure 3, this is point B. A single new point, E, is added, such that the new set of points AECD is symmetric with the old set of points ACDB. For the new set of points to be symmetric with the old set of points,

$$AE = CD = \omega AD$$

But we know

$$DB = \omega AB$$

so

$$AD = (1 - \omega) AB$$

$$CD = (1 - 2\omega) AB$$

$$(1 - 2\omega) = \omega (1 - \omega)$$

$$\omega = \frac{3 \pm \sqrt{5}}{2}$$

Each new point reduces the range to a fraction 1 − ω = 0.618 of the original range. To reduce the range to a fraction $\epsilon$ of the initial range therefore requires $n = \frac{\log \epsilon}{\log 0.618}$ function evaluations. The number 1 − ω is known as the golden mean.
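A minimal Python sketch of a golden-section search is given below, taking ω = (3 − √5)/2 ≈ 0.382 so that 1 − ω ≈ 0.618. It uses a common variant that compares only the two interior points (C and D) to decide which end of the range to discard, rather than evaluating the bounds themselves; that choice, and all names in the code, are assumptions for illustration.

```python
import math

OMEGA = (3 - math.sqrt(5)) / 2  # about 0.382, so 1 - OMEGA is about 0.618


def golden_section_search(f, x_lower, x_upper, fraction=0.01):
    """Minimize a unimodal f on [x_lower, x_upper].

    Stops once the range is no more than `fraction` of the initial range.
    Returns (x_best, number_of_function_evaluations).
    """
    a, b = x_lower, x_upper
    target = fraction * (b - a)
    # Interior points, each a distance OMEGA * (b - a) from a bound.
    c = a + OMEGA * (b - a)
    d = b - OMEGA * (b - a)
    fc, fd = f(c), f(d)
    evaluations = 2
    while (b - a) > target:
        if fc < fd:
            # Minimum lies in [a, d]: discard b and reuse c as the new d.
            b, d, fd = d, c, fc
            c = a + OMEGA * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]: discard a and reuse d as the new c.
            a, c, fc = c, d, fd
            d = b - OMEGA * (b - a)
            fd = f(d)
        evaluations += 1  # only one new point per cycle
    return 0.5 * (a + b), evaluations
```

Because one interior point is reused at every cycle, each reduction of the range by the factor 0.618 costs a single new function evaluation.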

The quasi-Newton method is a superlinear search method that seeks the optimum by evaluating f'(x) and f''(x) and searching for the point where f'(x) = 0. The value of x at step k + 1 is calculated from the value of x at step k using

$$x_{k+1} = x_k - \frac{f'(x_k)}{f''(x_k)}$$

and the procedure is repeated until the magnitude of $(x_{k+1} - x_k)$ is less than a specified convergence tolerance. If we do not have explicit formulae for f'(x) and f''(x), then we can make a finite difference approximation about a point:

$$x_{k+1} = x_k - \frac{\left[ f(x_k + h) - f(x_k - h) \right] / 2h}{\left[ f(x_k + h) - 2 f(x_k) + f(x_k - h) \right] / h^2}$$

The quasi-Newton method generally gives fast convergence unless f''(x) is close to zero, in which case convergence is poor.
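The finite-difference form of the iteration can be sketched in Python as below. The step size `h`, the tolerance `tol`, the iteration cap, and the function name are illustrative assumptions rather than recommended values.

```python
def quasi_newton_1d(f, x0, h=1e-4, tol=1e-8, max_iter=50):
    """Find a stationary point of f using Newton steps with central
    finite-difference estimates of the first and second derivatives."""
    x = x0
    for _ in range(max_iter):
        f_plus, f_here, f_minus = f(x + h), f(x), f(x - h)
        d1 = (f_plus - f_minus) / (2 * h)             # approximates f'(x)
        d2 = (f_plus - 2 * f_here + f_minus) / h**2   # approximates f''(x)
        if d2 == 0:
            # The step is undefined when the curvature estimate vanishes.
            raise ZeroDivisionError("second-derivative estimate is zero")
        x_new = x - d1 / d2
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("did not converge within max_iter iterations")
```

Note that the iteration only locates a point where the derivative estimate is zero; whether that point is a minimum or a maximum depends on the sign of the second derivative there.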

All of the methods discussed in this section are best suited to unimodal functions, that is, functions with no more than one maximum or minimum within the bounded range.

Optimization of Two or More Decision Variables

A two-variable optimization problem can be solved in one of the following ways:

- Convexity: Solve graphically using constraint boundaries.

- Searching in two dimensions: Extensions of the methods used for single-variable line searches

- Probabilistic Methods
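As one possible illustration of extending a single-variable line search to two dimensions, the sketch below alternates golden-section line searches along each variable in turn (a univariate search). This particular scheme, the example bounds, and all names are assumptions chosen for illustration; it presumes the objective is unimodal along each search line.

```python
import math

OMEGA = (3 - math.sqrt(5)) / 2


def line_min(g, lo, hi, tol=1e-8):
    """Golden-section minimization of a one-variable function g on [lo, hi]."""
    c, d = lo + OMEGA * (hi - lo), hi - OMEGA * (hi - lo)
    gc, gd = g(c), g(d)
    while hi - lo > tol:
        if gc < gd:
            hi, d, gd = d, c, gc
            c = lo + OMEGA * (hi - lo)
            gc = g(c)
        else:
            lo, c, gc = c, d, gd
            d = hi - OMEGA * (hi - lo)
            gd = g(d)
    return 0.5 * (lo + hi)


def univariate_search(f, bounds, start, sweeps=20, tol=1e-6):
    """Minimize f(x1, x2) by optimizing one variable at a time.

    bounds is ((x1_low, x1_high), (x2_low, x2_high)); start is (x1, x2).
    """
    x1, x2 = start
    for _ in range(sweeps):
        # Line search along x1 with x2 held fixed, then along x2.
        x1_new = line_min(lambda v: f(v, x2), *bounds[0])
        x2_new = line_min(lambda v: f(x1_new, v), *bounds[1])
        moved = abs(x1_new - x1) + abs(x2_new - x2)
        x1, x2 = x1_new, x2_new
        if moved < tol:
            break
    return x1, x2
```

A scheme like this can converge slowly when the variables interact strongly, which is one reason more sophisticated multivariable methods exist.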

Multivariable optimization is much harder to visualize in the parameter space, but the same issues of initialization, convergence, convexity, and local optima are faced. The best means of optimizing systems with multiple variables remains an area of active research.

Some of the methods are listed below:

- Linear Programming (LP)

- Nonlinear Programming (NLP)

- Successive Linear Programming (SLP)

- Successive Quadratic Programming (SQP)

- Reduced Gradient Method

- Mixed-Integer Programming

- Superstructure Optimization

Seider et al. (2004) give simple case studies on how to solve an NLP using ASPEN PLUS and HYSYS, beginning with a simulation model of the process to be optimized and continuing with cases in which the objective function is evaluated using an automated optimization algorithm.

Optimization in Industrial Practice

Many of the methods listed above are used industrially, especially linear programming and mixed-integer linear programming, to optimize logistics, supply-chain management, and economic performance. The specifics are traditionally a topic for industrial engineers.

Optimization in Process Design

Few industrial designs are rigorously optimized because:

- The errors introduced by uncertainty in process models may be larger than the differences in performance predicted for different designs, rendering the models ineffective for choosing between them.

- Price uncertainty usually dominates the difference between design alternatives.

- A substantial amount of design work goes into cost estimates, and revisiting these design decisions at a later stage is usually not justified.

- Safety, operability, reliability, and flexibility are top priorities in design. A safe, operable plant will often be more expensive than the economically optimal design.

Experienced design engineers usually think through constraints, trade-offs, major cost components, and the objective function to satisfy themselves that their design is “good enough” (Towler and Sinnott, 2013).

Sensitivity Analysis

A sensitivity analysis is a way of examining the effects of uncertainties in the forecasts on the viability of a project (Towler and Sinnott, 2013). First, a base case for analysis is established from the investment and cash flows. Various parameters in the cost model are then adjusted over their expected range of error; this shows how sensitive the cash flows and economic criteria are to errors in the forecast figures. The results are usually presented as plots of an economic criterion against the parameter varied, and give some idea of the risk involved in making judgments on the forecast performance of the project.
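The base-case-then-vary procedure can be sketched in Python as follows. The simple NPV model, the ±20% variation, and every number in the base case are hypothetical values chosen only to make the example run; they are not taken from the source.

```python
def npv(capital, annual_revenue, annual_cost, rate=0.10, years=10):
    """Net present value of a project with a constant annual cash flow.

    A deliberately simple, hypothetical cost model for illustration.
    """
    cash_flow = annual_revenue - annual_cost
    discounted = sum(cash_flow / (1 + rate) ** t for t in range(1, years + 1))
    return -capital + discounted


# Hypothetical base case, in arbitrary currency units.
base_case = {"capital": 100.0, "annual_revenue": 60.0, "annual_cost": 35.0}


def one_at_a_time(model, base, changes=(-0.2, 0.2)):
    """Vary each parameter in turn by the given fractional changes,
    holding all other parameters at their base-case values."""
    results = {}
    for name in base:
        row = []
        for change in changes:
            case = dict(base)
            case[name] = base[name] * (1 + change)
            row.append(model(**case))
        results[name] = tuple(row)
    return results


base_npv = npv(**base_case)
table = one_at_a_time(npv, base_case)
print(f"base-case NPV: {base_npv:.2f}")
for name, (low, high) in table.items():
    print(f"{name:15s} NPV at -20%: {low:8.2f}   NPV at +20%: {high:8.2f}")
```

Parameters whose variation changes the sign or size of the economic criterion most strongly are the ones that merit closer study.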

Parameters to Study

The purpose of sensitivity analysis is to identify the parameters that have a significant impact on project viability over the expected range of variation of the parameter. Table 1 contains typical parameters and their range of variation.

Table 1: Parameters to study in sensitivity analysis

Statistical Methods of Risk Analysis

In formal methods of risk analysis, statistical methods are used to examine the effect of variation in all parameters. A simple method described in Towler uses estimates based on the most likely value, upper value, and lower value: ML, H, and L, respectively. The upper and lower values are estimated from Table 1, and the mean and standard deviation are then estimated as

$$\bar{x} = \frac{H + 2ML + L}{4}$$

$$S_x = \frac{H - L}{2.65}$$

It is important to recognize that the estimates from this statistical method will likely be skewed if the assumed distribution is incorrect. The mean and standard deviation of parameters that depend on these estimates can then be calculated from the equations above. This allows relatively easy estimation of the overall error in a completed cost estimate, and can be extended to economic criteria such as NPV, TAC, or ROI. Commercial programs are available for more sophisticated analyses such as the Monte Carlo method (Towler and Sinnott, 2013).
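As a short worked sketch of these estimates, the Python below computes the mean and standard deviation of several cost items from their L, ML, and H values and combines them into an overall estimate. The cost items and their values are hypothetical, and the combination step assumes the errors in the items are independent, so that their variances add.

```python
import math


def mean_and_std(low, most_likely, high):
    """Estimate the mean and standard deviation from L, ML, and H."""
    mean = (high + 2 * most_likely + low) / 4
    std = (high - low) / 2.65
    return mean, std


# Hypothetical cost items as (L, ML, H), in arbitrary currency units.
items = {
    "reactor": (80.0, 100.0, 130.0),
    "column": (40.0, 50.0, 70.0),
    "exchangers": (18.0, 20.0, 26.0),
}

estimates = {name: mean_and_std(*values) for name, values in items.items()}

# For a total that is a simple sum of items, the means add.
total_mean = sum(mean for mean, _ in estimates.values())
# Assumption: item errors are independent, so the variances add.
total_std = math.sqrt(sum(std ** 2 for _, std in estimates.values()))

print(f"total cost estimate: {total_mean:.1f} +/- {total_std:.1f}")
```

If the item errors are correlated, for example because all items share the same cost index, the independent-variance assumption would understate the overall uncertainty.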

Contingency Costs

A minimum contingency charge of 10% is normally added to ISBL plus OSBL fixed capital to account for variations in capital cost. If the confidence interval of the estimate is known, the contingency charges can be estimated based on the desired level of certainty that the project will not exceed projected costs.
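One way to relate the contingency charge to a desired level of certainty is sketched below. It assumes the error in the capital cost estimate is normally distributed with a known mean and standard deviation, which is an assumption made here for illustration; the function name and the example figures are hypothetical. It uses `statistics.NormalDist`, which is available in Python 3.8 and later.

```python
from statistics import NormalDist


def contingency_fraction(mean_cost, std_cost, confidence=0.90, minimum=0.10):
    """Contingency, as a fraction of the mean estimated cost, needed so that
    the actual cost stays within budget with the given confidence.

    Assumes a normally distributed cost estimate; never returns less than
    the stated minimum contingency.
    """
    # Number of standard deviations above the mean for this confidence level.
    z = NormalDist().inv_cdf(confidence)
    return max(minimum, z * std_cost / mean_cost)


# Hypothetical estimate: mean 100, standard deviation 10 (arbitrary units).
fraction = contingency_fraction(100.0, 10.0, confidence=0.90)
print(f"contingency: {fraction:.1%} of estimated fixed capital")
```

With a tight estimate (small standard deviation) the calculated value falls below 10%, and the minimum contingency applies instead.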

Conclusion

Design optimization and sensitivity analysis are essential to designing and operating a successful chemical process. Optimization can be difficult because of high levels of uncertainty and the large number of variables, but it can help minimize costs and increase efficiency. Chemical engineers need to understand the optimization methods, appreciate the role of constraints in limiting designs, recognize design trade-offs, and understand the pitfalls of their analysis. Similarly, sensitivity analysis is a way of examining the effects of uncertainties in the forecasts on the viability of a project. If an engineer can optimize a process and perform a sensitivity analysis, the project is more likely to be cost effective and to run smoothly.

References

Peters MS, Timmerhaus KD. Plant Design and Economics for Chemical Engineers. 5th ed. New York: McGraw Hill; 2003.

Seider WD, Seader JD, Lewin DR. Process Design Principles: Synthesis, Analysis, and Evaluation. 3rd ed. New York: Wiley; 2004.

Towler G. Chemical Engineering Design. PowerPoint presentation; 2012.

Towler G, Sinnott R. Chemical Engineering Design: Principles, Practice and Economics of Plant and Process Design. 2nd ed. Boston: Elsevier; 2013.

Turton R, Bailie RC, Whiting WB, Shaeiwitz JA, Bhattacharyya D. Analysis, Synthesis, and Design of Chemical Processes. 4th ed. Upper Saddle River: Prentice-Hall; 2012.

Ulrich GD. A Guide to Chemical Engineering Process Design and Economics. New York: Wiley; 1984.